Thursday, 4 June 2015

Small and Medium Businesses Hit Hardest By Mobilegeddon

Your Daily SEO Fix: Week 3

Posted by Trevor-Klein

Welcome to the third installment of our short (< 2-minute) video tutorials that help you all get the most out of Moz's tools. Each tutorial is designed to solve a use case that we regularly hear about from Moz community members—a need or problem for which you all could use a solution.

If you missed the previous roundups, you can find 'em here:

- Week 1: Reclaim links using Open Site Explorer, build links using Fresh Web Explorer, and find the best time to tweet using Followerwonk.

- Week 2: Analyze SERPs using new MozBar features, boost your rankings through on-page optimization, check your anchor text using Open Site Explorer, do keyword research with OSE and the keyword difficulty tool, and discover keyword opportunities in Moz Analytics.

Today, we've got a brand-new roundup of the most recent videos:

- How to Compare Link Metrics in Open Site Explorer

- How to Find Tweet Topics with Followerwonk

- How to Create Custom Reports in Moz Analytics

- How to Use Spam Score to Identify High-Risk Links

- How to Get Link Building Opportunities Delivered to Your Inbox

Hope you enjoy them!

Fix 1: How to Compare Link Metrics in Open Site Explorer

Not all links are created equal. In this Daily SEO Fix, Chiaryn shows you how to use Open Site Explorer to analyze and compare link metrics for up to five URLs to see which are strongest.

Fix 2: How to Find Tweet Topics with Followerwonk

Understanding what works best for your competitors on Twitter is a great place to start when forming your own Twitter strategy. In this fix, Ellie explains how to identify strong-performing tweets from your competitors and how to use those tweets to shape your own voice and plan.

Fix 3: How to Create Custom Reports in Moz Analytics

In this Daily SEO Fix, Kevin shows you how to create a custom report in Moz Analytics and schedule it to be delivered to your inbox on a daily, weekly, or monthly basis.

Fix 4: How to Use Spam Score to Identify High-Risk Links

Almost every site has a few bad links pointing to it, but lots of highly risky links can have a negative impact on your search engine rankings. In this fix, Tori shows you how to use Moz’s Spam Score metric to identify spammy links.

Fix 5: How to Get Link Building Opportunities Delivered to Your Inbox

Building high-quality links is one of the most important aspects of SEO. In this Daily SEO Fix, Erin shows you how to use Moz Analytics to set up a weekly custom report that will notify you of pages on the web that mention your site but do not include a link, so you can use this info to build more links.

Looking for more?

We've got more videos in the previous two weeks' round-ups!

Don't have a Pro subscription? No problem. Everything we cover in these Daily SEO Fix videos is available with a free 30-day trial.

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don't have time to hunt down but want to read!

Google Tone: Noisy URL Sharing From Google

Google are forever playing around with their new acquisitions to create new tools and toys for the rest of us. One idea, built in a single afternoon for fits and giggles, is Google Tone.

Before long, this fun little idea was being used regularly around the Google offices, and a new publicly available extension was born…

What Is It?

While technology is meant to make life easier, and largely does, sharing is still a bit of a pain in some ways. There are so many ways to share that you may well have favourite platforms depending on the type of information you are sending, and almost all of them require you to go through a number of steps. Part of the attraction of Tone is that, at least for recipients who are near you, sharing is a simple one-click action.

‘Broadcast any URL to computers within earshot’. Yes, really. Seemingly ridiculous but perfectly workable, Google Tone allows any URL to be sent from one device to another via a short stream of cheery computer noises.

In Google’s words:

“Sometimes in the course of exploring new ideas, we’ll stumble upon a technology application that gets us excited. Tone is a perfect example: it’s a Chrome extension that broadcasts the URL of the current tab to any machine within earshot that also has the extension installed. Tone is an experiment that we’ve enjoyed and found useful, and we think you may as well.”
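Neither this post nor Google's description explains how Tone actually turns a URL into sound. To make the general idea concrete, here is a toy Python sketch that is not Tone's real encoding: it assumes each 4-bit chunk of the URL becomes a 20 ms sine tone picked from 16 frequencies, and decodes by measuring each candidate frequency's power with the Goertzel algorithm. The sample rate, tone length and frequency grid are invented parameters.

```python
import math

SAMPLE_RATE = 44_100      # samples per second (assumed)
SAMPLES_PER_TONE = 882    # 20 ms per tone at 44.1 kHz (assumed)
BASE_FREQ = 1_000.0       # Hz used for the value 0 (assumed)
FREQ_STEP = 200.0         # Hz between the 16 possible tones (assumed)


def to_nibbles(data: bytes):
    """Split every byte into its high and low 4-bit halves (values 0-15)."""
    for byte in data:
        yield byte >> 4
        yield byte & 0x0F


def encode(url: str) -> list:
    """Turn a URL into audio samples: one short sine tone per nibble."""
    samples = []
    for nibble in to_nibbles(url.encode("utf-8")):
        freq = BASE_FREQ + nibble * FREQ_STEP
        samples.extend(
            math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
            for i in range(SAMPLES_PER_TONE)
        )
    return samples


def goertzel_power(block, freq: float) -> float:
    """Power of a single frequency in a block of samples (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq / SAMPLE_RATE)
    prev, prev2 = 0.0, 0.0
    for x in block:
        prev, prev2 = x + coeff * prev - prev2, prev
    return prev ** 2 + prev2 ** 2 - coeff * prev * prev2


def decode(samples) -> str:
    """Recover the URL by picking the loudest of the 16 tones in each slot."""
    nibbles = []
    for start in range(0, len(samples), SAMPLES_PER_TONE):
        block = samples[start:start + SAMPLES_PER_TONE]
        powers = [goertzel_power(block, BASE_FREQ + k * FREQ_STEP) for k in range(16)]
        nibbles.append(max(range(16), key=lambda k: powers[k]))
    data = bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))
    return data.decode("utf-8")


if __name__ == "__main__":
    audio = encode("https://moz.com")
    print(len(audio), decode(audio))  # 26460 https://moz.com
```

Each tone lasts a whole number of cycles of every candidate frequency, so in a clean signal the 16 tones don't leak into each other. Real rooms add noise, echo and distance, which this toy ignores entirely.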

What Can I Send?

When they say ‘any URL’, it is quite true. Anything from pages, to video, to GIFs and search results can be sent through the aural ether with the click of a button to another computer or phone, or even during Hangouts, as long as the intended recipient has the Tone extension added and switched on.

How Does It Work?

Alex Kauffman has created this 0:26 video which shows us how simple Google Tone is to set up and use:

Firstly, download the Google Tone extension.

When you are looking at a page you want to share with other Tone users in the vicinity, simply click the Tone icon in your toolbar:

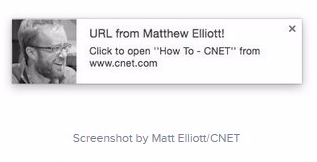

…and that’s it: a few plinky plonky computer noises later (which you can hear here), your Tone compadres will receive a notification, with your profile image attached, asking if they would like to open the link:

Is It Weird, Though?

I’m not 100% sure of the technical ins and outs of a service such as this; however, once the service is activated, the microphone on each device is also activated.

“Google Tone collects anonymous usage data in accordance with Google’s Privacy Policy”

Google Tone and its usage fall within Google’s existing Terms & Conditions for privacy, but that in itself could mean a number of things.

What will they listen to? How much information is stored? What is it used for? Potential can of worms…

There are also valid security concerns, and I for one will not be sharing any sensitive or personal information while the extension is enabled.

Teething Problems

Chromecast already uses a not dissimilar technology, though its version works beyond our hearing range. Apparently the first version of Tone was more efficient but far less aurally pleasing!

The idea for Tone is based on human speech, so it can have the same limitations. For example, not all devices may pick up on Tone’s broadcasts, depending on a number of variables such as how far apart the devices are, angles, room acoustics, and so on, just like human vocal interactions.

Google Tone is still in the experimental stage, and you may find compatibility issues, as well as problems with Tone being able to hear itself if there is background noise, but for a ‘first draft’ it’s looking pretty good.

What’s Next?

Google Tone is far from unique; a number of apps already perform a similar function, but this is Google. In my humble opinion, they have more scope, time and budget to take this further than any close alternative.

One competitor is British-based Chirp, which shares photos, web pages and contacts using similar technology. Apple have their AirDrop system too, so we shall see how Google go against their behemoth of a competitor.

As problems with the extension are ironed out and various excitable engineers play with Tone into the dead of night, there are many more potential uses for Google Tone yet to be seen. The possibilities are vast, and with enough time and budget Tone may develop to let us share more, do more, and create more in the workplace and beyond.

Have you tried Google Tone? Let us know how it worked for you in the comments…

Post from Laura Phillips

Yahoo to Stop Maps and Pipes This Summer

AdWords Offers Updates to YouTube Brand Lift Insights

Google Buy Button a Reminder to Make Local Marketing Compelling

The Collateral Damage of Google’s Link Policy

Bing Adds Automated Rules to Streamline Campaign Management

Are Landing Pages Killing Your Conversion Rate?

Wednesday, 3 June 2015

SEO is no longer a skill you can just pick up: Training an SEO

Back in 2011, when I was first starting out in my SEO career (bright-eyed, bushy-tailed and spinning content like there was no tomorrow), I wrote an article on ‘How to Learn to do SEO’, and one of my very first sentences was:

Throw yourself in at the Deep End

SEO (or digital marketing, online PR, etc.) is not an industry where people sit down with you for 3 months, hold your hand and train you. Nor should it be.

I am now going to dedicate the rest of this article to explaining how I was wrong.

Within my (admittedly completely subjective) experience, SEO managers are not always the best at training. We get our juniors in, give them some Moz resources and a small client, and after a few short weeks expect results to start coming in. There are a few reasons for this. Firstly, because that’s how we learnt: scrappy SEOers armed only with Scrapebox, a reliable content writer on oDesk and an insatiable desire to achieve top ranking positions! We learnt through trying, testing, tweaking, failing and sharing our knowledge with others (hence SEO becoming an industry where people openly share their learnings with the wider community).

Secondly, because the fundamental basics of SEO really aren’t all that difficult to grasp (though I’ve yet to prove this on my parents).

Thirdly, because it’s hard to train people in something so fluid and changeable. Tactics can change monthly, so any training programme that’s set up is likely to be out of date before the year is out. How you go about SEO is also largely dependent on personal preference. There are the basic things you need to do (have a site that is accessible, content that is relevant and pages that are authoritative), but the means of achieving them differ hugely. Utter the word ‘infographic’ at a search conference and you’ll walk out to cries of “are shit!”, “great for small budgets!”, “spam signal!” and so forth. There is no agreed consensus on what good SEO tactics are, so training them seems redundant, and we often leave people to work things out alone.

However, there’s a problem with this: SEO is no longer a single discipline, and it’s not as easy as tweaking some content and buying some links anymore. While the fundamental basics of SEO really aren’t all that difficult to grasp, juggling multiple clients or projects, prioritising tasks and making the most of your time really can be.

For scalable, long-term SEO, there has to be a strategy, there has to be a process, there has to be a start, middle and desired end, and when you’re new to the channel, this will require guidance.

Start with an assessment

When I was training SEO beginners, I found myself accidentally making assumptions about their knowledge of algorithms, or what a keyword was, or what a link was. When you live and breathe an industry the way SEOs often do, going back to the absolute basics can be really tough, and I’d end up wasting time pitching training at the wrong level. A potential solution is to start by asking juniors to fill out an assessment form that gauges their knowledge, with questions ranging from the very basic to the slightly more complex. Based on this assessment, you can provide staff with the right training.

Move on to Analytics

Knowing where to start, and what to prioritise, is one of SEO’s main challenges. When there are a hundred different problems to fix and a hundred different opportunities to exploit, taking all of that in, sitting down at your desk and just picking something small to do is hard.

One of the most invaluable skills to develop within your SEO weaponry (I regret typing that) is the ability to find something tactical and actionable that will have an impact on performance and just do it.

Analytics (be it GA and GWT, Omniture, WebTrends, etc.) needs to be taught, and not simply as ‘you can see traffic here’ and ‘this is where you find conversions’, but more as ‘this is how you find pages that need their content improving’. There’s a nice starter guide on Google Analytics Help.

The Landing Pages report shows the URLs to your website that have generated the most impressions in Google Web search results. With this report, you can identify landing pages on your site that have good click through rates (CTR), but have poor average positions in search results. These could be pages that people want to see, but have trouble finding.
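If you export that report's rows, the filter it describes (good CTR, poor average position) is easy to script. A minimal Python sketch, assuming you have the URL, impressions, clicks and average position for each landing page; the thresholds below are arbitrary starting points, not Google recommendations, and the example rows are made up.

```python
from dataclasses import dataclass


@dataclass
class LandingPage:
    url: str
    impressions: int
    clicks: int
    avg_position: float  # 1.0 means the top result

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def good_ctr_poor_position(pages, min_ctr=0.05, worse_than=10.0, min_impressions=100):
    """Pages people click when they see them, but which rank too low to be seen often.

    Thresholds are arbitrary examples; tune them to your own site.
    """
    matches = [
        p for p in pages
        if p.impressions >= min_impressions  # ignore tiny samples
        and p.ctr >= min_ctr
        and p.avg_position > worse_than      # bigger number = lower ranking
    ]
    return sorted(matches, key=lambda p: p.ctr, reverse=True)


pages = [  # made-up example rows
    LandingPage("/guides/italy", 1000, 80, 14.2),
    LandingPage("/", 5000, 50, 3.1),
    LandingPage("/guides/peru", 400, 40, 22.0),
    LandingPage("/tiny-page", 50, 10, 30.0),
]
for page in good_ctr_poor_position(pages):
    print(page.url, f"{page.ctr:.0%}", page.avg_position)
```

Sorting by CTR puts the pages with the clearest "people want this but can't find it" signal at the top of the to-do list.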

Excel at Excel!

Invest in proper Excel training for your staff. Having an advanced knowledge of Excel will save reams and reams of time and is an investment that will more than pay off in the long run. I was fantastically bad at Excel for a very long time (mainly because I was always listening to Slipknot in GCSE IT), and I lost considerable time doing things manually that Excel would have done for me, had I known how. It’s important to remember that Excel is nigh on impossible to learn alone: without knowing its capabilities, you can easily miss out on faster ways to do things.

Anybody working in an SEO team (be that in outreach or technical) will benefit significantly from being proficient in pivot tables, IF functions, lookups, RAND and loads more. There’s a nice article on Excel for SEO here.
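To show what a few of those functions actually do, here is a rough Python equivalent of a lookup, an IF and a one-field pivot table over some keyword data. It illustrates the concepts rather than replacing Excel; the column names and numbers are hypothetical, and treating positions 11 to 20 as "page two" assumes ten organic results per page.

```python
from collections import defaultdict


def lookup(key, table, default=0):
    """Exact-match lookup, like IFERROR(VLOOKUP(key, range, 2, FALSE), 0)."""
    return table.get(key, default)


def pivot_sum(rows, row_field, value_field):
    """A pivot table with one row field and 'Sum of' one value field."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[row_field]] += row[value_field]
    return dict(totals)


# Hypothetical exports: rankings from one tool, search volumes from another.
rankings = [
    {"keyword": "luxury travel guide", "url": "/guides/luxury", "position": 12},
    {"keyword": "luxury holidays", "url": "/guides/luxury", "position": 4},
    {"keyword": "best honeymoon destinations", "url": "/honeymoons", "position": 15},
]
volumes = {"luxury travel guide": 300, "luxury holidays": 900}

rows = [
    {
        **row,
        "volume": lookup(row["keyword"], volumes),                   # VLOOKUP
        "page_two": "yes" if 11 <= row["position"] <= 20 else "no",  # IF
    }
    for row in rankings
]
print(pivot_sum(rows, "url", "volume"))  # {'/guides/luxury': 1200, '/honeymoons': 0}
```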

Save tools for later

Tools are great; they save us time and help us define processes and scale. However, they are not a great starting point for a total newcomer to SEO, simply because you need to understand the manual process first. For example, a friend was training one of his team on outreach, and he taught her how to build lists manually, how to find influencers using Google advanced queries and how to write a good, tailored email.

It was only after a few weeks of her doing this that he said, “okay, so here’s Scrapebox where you can harvest search results, here’s how to mail merge, here’s how to find influencers using Followerwonk/Buzzsumo/Linkdex/etc”

She was annoyed he’d let her do all of that work manually, but he thought it was important she understood the actual process initially, before getting to grips with how to speed it up.

Get expert training from other channels

You know who’s really good at link building? PRs.

Invest in training from PR or creative experts to instill good outreach habits from the start. Depending on your particular flavour of SEO, establishing a mindset of relationships first and links second is likely to get better results long-term.

Create test sites

Learning on the job is still the fastest way to learn, but it can be difficult to do this on a client or brand site. When I first started out, I was so afraid of messing up or doing something wrong that I struggled to do anything. Create some test sites (and, crucially, some time!) for your juniors to play on. Better still, set aside some budget for your juniors to create test sites themselves, and walk them through setting up hosting, installing WP, etc. This is an excellent resource for absolute beginners.

You can also use these as test grounds for research that can become blog posts or whitepapers.

Test and Assess

Find sites that have a lot wrong with them (send me a DM and I’ll give you some ;)) and use these to continually assess the level of the team – what did they find? What did they miss? Where are the gaps in the knowledge?

Using the same site also means they can track their own progress and see how far they’ve come.

Ultimately, the main thing you need to do when training an SEO is make time. It’s easy to get bogged down in client work, pitches or other demands, but setting aside significant time to train someone effectively is never time wasted.

The last point in that blog post I wrote all those years ago, however, still rings very true, and that is:

Nobody knows everything

There is not a single person in the SEO industry who knows absolutely everything, so don’t be afraid to admit your faults and weaknesses.

Post from Kirsty Hulse

YouTube’s First Decade: The Top Ads and the Trends That Define Them

How to Generate Content Ideas Using Buzzsumo (and APIs)

Posted by Paddy_Moogan

Content is an important part of any digital marketing strategy, whether it's content for links, conversion, education, search or any number of other things. In this post I want to focus on one area: content that drives awareness of your brand.

One tangible way to measure awareness is to look at the new visitors a piece of content attracts, which is pretty easy (although not always perfect) using something like Google Analytics. I'm also going to talk about two other measures that can influence new visitors:

- Social shares

- Links

Both of these can send traffic to your website, although I wouldn't assume that they correlate exactly. This should explain why.

Nonetheless, chances are that if you're creating a piece of content that is designed to create awareness, links and social shares are likely to be high up the list of objectives, just below traffic.

But where do you start? What content idea will work best?

If you haven't already, I'd highly recommend you take a look at this deck from Mark Johnstone who is one of the best people I know when it comes to explaining what makes a good idea. Follow it with this post from Hannah Smith just last week on Moz on creative content research.

In this post, I want to explain one of the processes that we use at Aira to generate content ideas for clients. It's not the only thing we do, but it gives us a massive push in the right direction, even in industries that may be tagged as "boring" or hard to understand. The reason for this is that we gather as much information as we can on what has already worked in a particular industry. We use an awesome tool called Buzzsumo to help with this, and I'm going to show you three core things:

- How to use the Buzzsumo tool (free and paid versions)

- How to hack together a Google Doc to automate some of the work for you and pull in additional metrics

- How to scale it up and create a tool that does the heavy lifting and adds in other magic too

This is exactly the process that we've gone through at Aira. We started using the Buzzsumo tool as it was intended, then I wanted to get a bit more from it so I played with the API, then we saw the potential and built a proper tool that scales and pulls in other data. Each time we do a content strategy for a client, we tweak the process and usually add something new. So this is a work in progress but even right now it is working well.

The process is very data-driven and we don't try to hide the fact that we're learning from what others have done. The fact is that there is a wealth of data out there ready to be used to inform our decision making and the choices we make—so we should use it.

If you're already familiar with Buzzsumo, feel free to skip to the section on using the API to see what else you can do with it.

The goals of content profiling

Before diving into Buzzsumo itself, I want to be really clear on what we're trying to achieve through this process. It can be a long process (more on speeding it up later) and you can go down a few rabbit holes before getting the insight or takeaway that you're looking for. So keep the following in mind as you step through this.

Overarching goal: Gather as much information and data as possible to feed into your brainstorming / content ideation process. So rather than just starting with a blank slate, you have lots of research behind you which can make your ideas a little bit better and allow you to make more informed choices.

This goal is important to remember because this process won't magically give you fully formed content ideas. It will, however, feed heavily into your ideation, lead to better content ideas and, crucially, probably make you ditch some ideas that may not be so good!

There are a few secondary goals that help set the context for what we're doing:

- Learn what is working well in your industry

- Learn what your competitors are doing well

- Learn what social networks are best for you to target

Keep these in mind as you go through this process.

Using Buzzsumo for content profiling

To make this post more useful and easier to follow, let's imagine we're working with a client in the travel space and we've been tasked with coming up with content ideas for them. They are looking to get in front of their primary audience, which is mid- to high-end travellers.

Now let's use Buzzsumo to try and see what is working in terms of content in this space that may appeal to this target audience.

There are two main ways for us to use Buzzsumo to find this information:

- Keyword based search

- Domain based search

We tend to use both types of search, but it's worth mentioning that domain-based searches are occasionally limited because client competitors may not be doing that much in terms of content creation, so there isn't much for Buzzsumo to find! This is usually the exception, though, and even smaller websites have done some content at one point or another.

Keyword-based research using Buzzsumo

Let's start by looking at a few keyword-based searches.

One thing we need to be aware of at this point is the difference between commercial keywords and content-driven keywords. Our travel client may be targeting keywords such as:

- luxury holidays

- last minute luxury holidays

- luxury honeymoon destinations

Putting these into Buzzsumo will return some results, but they won't be as useful as the results for content-driven keywords such as:

- luxury travel guide

- best honeymoon destinations

- guide to getting married abroad

These keywords are non-commercial in nature and therefore, the results we get back from Buzzsumo will be for content created using these keywords and not product / service pages that list holidays. Overall, Buzzsumo does a pretty good job of filtering out pages that are 100% focused on selling products / services, but a few do slip through sometimes.

You can search Buzzsumo using quotation marks if you want to narrow down results a bit, but we'll start off broad with the following search:

I've also selected "Past Year" from the left hand side so that I stand a good chance of getting a good set of results with lots of data.

Here's what the results look like:

We can see the results ordered by total number of shares, then broken down by each of the large social networks too. You can dive into each result to view backlinks to that content and Twitter accounts that have shared that content too:

If you're using the free version of Buzzsumo, you can continue to run searches like this and do deep dives into pieces of content that catch your eye. Over time, you will start to notice trends and be able to take action based on what you see. Here are the ones we find most useful.

Seeing which domains appear the most

After only a few searches, you will start to notice domains appearing over and over again for your keywords. Just in the one screenshot above, we can see that A Luxury Travel Blog appears twice in the top few results. The first thing you should do is make a note of this domain so you can use it to do deeper research a little later.

Secondly, and this is the important point, this domain clearly has a decent-sized audience, because it consistently gets good numbers of social shares across multiple posts. This means it could be a good domain to take a closer look at, and to try to build a relationship or run a campaign with.

Uncovering influencers who you can reach out to

After a few searches and deep dives, you will also see patterns in who appears most often when you click the "View Sharers" button. Make a note of these people and, again, start looking for ways to engage with them, because you know they're relevant to your niche and open to sharing the kind of content you're able to create.

Find the common themes / topics of content

The final thing that we tend to try and pick up on is the themes of the content titles too. After several searches, you will start to see what typically appears in the top results for your set of keywords.

Once you've done all of this, you should have a few things in your notes:

- Ideas on what content themes perform best - you can use these to start your own ideation and brainstorming.

- A list of domains that tend to get lots of shares - you can do a deeper dive on their content (see next section) and look to build relationships with them to help you promote your future content

- A list of influencers on Twitter who tend to share relevant content - you can try to contact these people and see if they'd be open to sharing the content you create too
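If you're noting results down by hand (or exporting them), a few lines of Python can do the tallying for you. A minimal sketch, assuming each search's results are recorded as a URL plus the Twitter handles shown under "View Sharers"; the data structure, domains and handles are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def tally(searches, top_n=5):
    """Count how often each domain and each sharer appears across many searches.

    `searches` maps a keyword to a list of results; each result is a dict with
    a "url" and an optional list of "sharers" (Twitter handles).
    """
    domains, sharers = Counter(), Counter()
    for results in searches.values():
        for result in results:
            host = urlparse(result["url"]).netloc.lower()
            domains[host.removeprefix("www.")] += 1
            # set() so one handle counts once per piece of content
            sharers.update(set(result.get("sharers", [])))
    return domains.most_common(top_n), sharers.most_common(top_n)


searches = {  # made-up example data
    "luxury travel guide": [
        {"url": "https://www.luxury-blog.example/a", "sharers": ["@alice", "@bob"]},
        {"url": "https://www.luxury-blog.example/b", "sharers": ["@alice"]},
        {"url": "https://travel-mag.example/c", "sharers": ["@carol"]},
    ],
    "best honeymoon destinations": [
        {"url": "https://luxury-blog.example/d", "sharers": ["@alice", "@carol"]},
    ],
}
top_domains, top_sharers = tally(searches)
print(top_domains)  # [('luxury-blog.example', 3), ('travel-mag.example', 1)]
print(top_sharers)  # [('@alice', 3), ('@carol', 2), ('@bob', 1)]
```

Stripping "www." means the same site isn't split into two entries depending on how a URL was written.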

Now let's move from a keyword-based search to a domain-based one.

Domain-based research with Buzzsumo

In the same way that you can run keywords through Buzzsumo, you can also run domains through it and find the most shared content on that domain. This is really useful when you want to do a deeper dive into domains that kept appearing in your keyword research, as well as taking a closer look at competitors to see if they are doing anything you can learn from.

One thing to note here is that sometimes you won't get a great set of results back from Buzzsumo. This tends to happen in smaller niches or on particularly new domains where Buzzsumo may not yet have enough data to be useful.

Remember earlier that A Luxury Travel Blog kept appearing on our keyword searches? We can now take a closer look at them with a simple search:

The results look similar to a keyword search but as you can see, the results are limited to this domain:

Again, we can take a deeper dive and see what's working for this particular domain. Just a glance at the top four results gives me two insights:

- List posts (ones built around a number of items) appear to do well, with the top three all being lists

- The same author has written the top two posts, which means we can take a deeper look at what she is writing and try to work out why this is

We can take a closer look at her content by clicking on her name:

We can then do a bit of digging and try to find trends and insights that may help us when coming up with our own ideas. It may also turn out that she has a big social following which makes her someone you may want to engage with.

All of the above is available using the free version of Buzzsumo, which is great. The paid version lets you go a bit further in a few ways though, and given that pricing currently starts at $79 per month, I think it's a reasonable cost and achievable for most people. So I want to talk about what you can do with the paid version too. Later, we'll go a step beyond this and look at what you can do with the paid API as well.

Deep-dive content keyword analysis

When you run a standard keyword search like we did above, keep an eye open for keywords that seem very useful and relevant and that return lots of results. When you find one, you can use Buzzsumo to dig deeper into that keyword and get all sorts of useful data about the content produced around it.

You can do this by using the Content Analysis report:

You run a keyword search as normal and Buzzsumo takes a little longer than usual because it's generating a much bigger report which includes a number of things. I won't go into detail on every single one here, but I will give you my favourite sections.

Average social shares by network

This tells us which networks typically generate the most social shares for content that mentions "luxury travel guide." For most B2C industries, the split between networks above is pretty normal, whereas you tend to see a much higher number for LinkedIn when it comes to B2B industries.

The interesting thing here, though, is not so much the split of shares between networks—most of us could guess that Facebook and Twitter would drive the most shares. What is interesting is the average number of shares because this can really help us get a sense of what is achievable with this particular type of content.

In the case above, we can see that, on average, a piece of content mentioning "luxury travel guide" gets about 40 Facebook likes and about 30 tweets. If your client or your boss is expecting 100,000 of each from your new piece of content, you can show them this data and it can help set their expectations a bit. The fact is that many pieces of content don't get more than a few dozen social shares, but we only see and remember the ones that get hundreds of thousands and that is where our expectation is set.
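The arithmetic behind that expectation-setting conversation is simple. A sketch, assuming you have per-network share counts for each result (the numbers below are invented); it reports the median alongside the mean, because one viral outlier can drag an average well above what a typical piece achieves.

```python
from statistics import mean, median


def share_benchmarks(results, networks=("facebook", "twitter")):
    """Mean and median shares per network across a set of results."""
    return {
        n: {
            "mean": mean(r.get(n, 0) for r in results),
            "median": median(r.get(n, 0) for r in results),
        }
        for n in networks
    }


def times_typical(target, benchmarks, stat="median"):
    """How many 'typical' pieces of content a target share count represents."""
    return {n: target / b[stat] for n, b in benchmarks.items() if b[stat]}


results = [  # invented per-article share counts for one keyword
    {"facebook": 10, "twitter": 15},
    {"facebook": 20, "twitter": 25},
    {"facebook": 30, "twitter": 35},
    {"facebook": 100, "twitter": 45},
]
bench = share_benchmarks(results)
print(bench)
print(times_typical(100_000, bench))
```

Here the Facebook mean (40) sits well above the median (25) because of the single 100-share article, which is exactly the effect that skews our memory of "normal" results.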

Average shares by content length

This one doesn't really drive massive insights, but I want to include it because it can be a great one for driving home a point. The point being that you can't just throw up a few hundred words of content and expect it to fly. It can happen, but for most of us, we need to invest time and resources into content creation, and long-form writing and guides can lead to lots of social shares.

Whether people read articles that they share on social is another story, but that's for another day. The message here is that typically, content that is more in-depth and detailed will get more social shares. I've seen the odd exception when doing research for clients, but generally I'll see the graph above over and over again.
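If you want to check the length-versus-shares pattern against your own research, grouping articles into word-count buckets is enough. A minimal sketch; the bucket edges are an assumption rather than necessarily the ones Buzzsumo uses, and the example data is made up.

```python
from statistics import mean


def shares_by_length(articles, edges=(1000, 2000, 3000, 10000)):
    """Average total shares per word-count bucket.

    `articles` is a list of (word_count, total_shares) pairs. With the default
    edges the buckets are <1000, 1000-2000, 2000-3000, 3000-10000 and 10000+.
    Empty buckets are left out of the result.
    """
    labels = (
        [f"<{edges[0]}"]
        + [f"{lo}-{hi}" for lo, hi in zip(edges, edges[1:])]
        + [f"{edges[-1]}+"]
    )
    groups = {}
    for words, shares in articles:
        # first bucket whose upper edge the article is below; the open-ended top bucket otherwise
        index = next((i for i, edge in enumerate(edges) if words < edge), len(edges))
        groups.setdefault(index, []).append(shares)
    return {labels[i]: mean(groups[i]) for i in sorted(groups)}


articles = [(400, 10), (800, 30), (1500, 60), (2500, 120), (12000, 300)]  # made up
print(shares_by_length(articles))
# {'<1000': 20, '1000-2000': 60, '2000-3000': 120, '10000+': 300}
```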

Most shared domains

I really like this section of the analysis because it effectively becomes my outreach target list. Buzzsumo is telling me that when it comes to my target keywords, these are the domains that usually get the most shares. Therefore, these are exactly the domains where I want my client to be featured in some form or another. What Buzzsumo is doing here is a bit like what Richard Baxter talks about in this post on outreach where you are trying to be super targeted and not just going after random link targets.

Average shares by content type

Total honesty here: this one can be a bit hit and miss. Generally, the broader the keyword, the better the results you get in this graph. The example above isn't bad, but it isn't great either. What Buzzsumo is doing here is analyzing the articles it has crawled and trying to work out what type of content each one is. In this example, it has figured out that there are "How to" style articles, lists and videos related to luxury travel, and it has given me the average social shares for each.

To give you a better example, here is a recent search I ran for another Aira client. I can't tell you the exact keyword, but this was the result:

As you can see, there are far more content types and this gives us a good idea of what types of content can perform well. The interesting thing for this particular client was the disproportionate number of LinkedIn shares for "How to" articles which informed our research and gave us a path to go down to find out what was happening. We were able to take a closer look at this content and use this to make recommendations to the client.
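The post doesn't describe how Buzzsumo classifies content type. As a rough stand-in for your own research, you can classify articles by title alone; the patterns below are our own guesses, so expect misclassifications, and the example data is invented.

```python
import re
from statistics import mean

# Rough title patterns, checked in order; these are guesses, not Buzzsumo's rules.
PATTERNS = [
    ("how to", re.compile(r"^\s*how to\b", re.IGNORECASE)),
    ("list", re.compile(r"^\s*\d+\s+\w")),  # e.g. "10 Hotels ..."
    ("question", re.compile(r"\?\s*$")),
]


def content_type(title: str) -> str:
    for label, pattern in PATTERNS:
        if pattern.search(title):
            return label
    return "other"


def average_shares_by_type(articles):
    """articles: list of (title, total_shares) pairs."""
    groups = {}
    for title, shares in articles:
        groups.setdefault(content_type(title), []).append(shares)
    return {label: mean(values) for label, values in groups.items()}


articles = [  # invented example data
    ("How to Pack for Two Weeks in Italy", 100),
    ("How to Choose a Safari Lodge", 300),
    ("10 Hotels Worth the Splurge", 50),
    ("Is Business Class Worth It?", 80),
    ("Our Maldives Diary", 20),
]
print(average_shares_by_type(articles))
```

Checking "how to" before the list pattern means a title like "How to ... in 5 Steps" counts as a how-to, which is a judgement call you may want to change.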

There are other bits of data available in this deep dive report but typically, these are the ones I find most useful.

Deep-dive domain analysis

Just like with a basic search, you can switch out your keyword and put in a domain instead and ask Buzzsumo to do deeper analysis on it. Let's take a closer look at Lonely Planet and see what data we can get that may help with content ideas for our travel client.

We get the same set of core graphs that I've mentioned above, but the takeaways and actions can be a little different when performing domain research, particularly if you're running a competitor through it.

The graph above shows us that Lonely Planet is driving a disproportionately high number of social shares through Facebook compared with any other platform. It would probably be wise for us to take a closer look at their Facebook page and see exactly what they are doing.

An additional graph from Buzzsumo which I didn't mention above is this one:

This is another one that can be hit and miss, but in this case, it is pretty useful because it gives us a rough idea of what topics drive the most social shares. There are a couple in there which aren't very useful such as "travel", "planet" and "us". But the rest can help inform our ideation going forward and give us angles to research a bit further.

What is interesting about the graph above is the disproportionate number of Pinterest shares that content focused on Italy gets. This gives us a route to go down to learn more and perhaps gain some insights to feed into our content process.

You can do this kind of analysis for competitors, too, and see what content is getting the most social shares, as well as which social networks are working best for them. At Aira, we were able to show one client how well Facebook was working for a number of their competitors, which led them to hire a social media person who could focus on this channel and grow the brand presence with their target audience.

All of the reports above can help you come up with content ideas. Whether you use the free or paid version of Buzzsumo, you'll be able to get good insights and learn from competitors and the wider industry to see what has worked before, then feed that into your ideation.

Taking it a step further: Using the Buzzsumo API

Spoiler alert: If you have a Buzzsumo API key, just make a copy of this spreadsheet, input your key and all of the stuff below will magically work.

We used the above process a few times at Aira and it works well, but we wanted something more powerful and quicker. It can take a while to run these reports, especially if you have lots of keywords and domains to check. I had a quick look at the Buzzsumo API and saw that it used JSON, which I'd worked with before in Google Docs, so I decided to try to hack something together that could eventually be developed into a tool, if it worked.

For me, as a non-developer, that was a big if!

I wasn't looking to create anything fancy or scalable, though. I just wanted to build something that worked and could be used as a prototype for building a "proper" tool (more on that below, or you can jump ahead).

I did start off with a few simple things in mind, though: the problems I was trying to solve by using the API rather than just using the app itself. Given that some development time is needed to build this tool, I needed good reasons to invest the time. For me, I had a few:

- I wanted to run the tool for lots and lots of keywords and lots and lots of domains at the same time. Buzzsumo can do this, but you still have to type in the advanced search query. I just wanted to copy and paste keywords / domains into a single field and hit go! This is even more important to us because of the way we gather keywords and domains, which is covered in the next point.

- We use an internal tool based around the SEMrush API to gather keywords from client competitors and it would be super cool and quick to pass the output from this tool straight into the Buzzsumo API.

- We have another tool that tells us who a client's true organic search competitors are, and, similar to the previous point, it would be super useful to pass these competitors straight into Buzzsumo for processing and to give us lots of cool data about their content.

- We wanted to add in additional metrics that Buzzsumo doesn't give us for each URL, such as Moz metrics, Majestic metrics and sentiment / demographic analysis.

- Buzzsumo doesn't give you "live" numbers when it comes to social shares, so if we used the API, we could integrate with the Shared Count API to recrawl the URLs and get fresher social numbers.

These reasons were enough for me to explore the API a bit and see if it could help solve these problems.

For those of you who actually know what you're doing when it comes to coding / APIs, etc., please don't scrutinise my work too much! I'm what you'd call a copy and paste coder and I'm sure there are cleaner / more efficient ways to do what I've done. Remember that the point here is to build a prototype that could be scaled into a full tool.

You will need an API key from Buzzsumo to do any of this work. It comes with their agency plan, which isn't super cheap but is very cost-effective for agencies that are doing lots of this kind of research.

Let's start with the basics of the Buzzsumo API and what you can get from it.

There are five main ways of getting data out of Buzzsumo:

- Top content - returns the most popular content for your chosen domain or keyword

- Top influencers - returns influencers for the topic of your choice

- Article sharers - returns a sample of people who shared a specific article on Twitter within the first few days of it being published

- Links shared - returns a list of links shared by an influencer of your choice

- Average shares - returns the average number of shares for content published in the last six months from a particular domain

We're going to focus on the first one for "Top Content" as this follows on nicely from the steps I've already outlined above. I'll talk about the other options for the API in another post :)

You can view the documentation for the Top Content section of the API here. A request to the API looks like this:

http://api.buzzsumo.com/search/articles.json?article,infographic,video,guest_post,giveaway,interview&q=lonelyplanet.com&result_type=total&num_days=7&page=0&api_key=API_KEY_HERE

Let's break this down and see what is happening.

http://api.buzzsumo.com/search/articles.json

This is the URL we need to access the API, and the bit at the end tells Buzzsumo which of the five types of API we want to access and the format we want the data to be returned in.

article,infographic,video,guest_post,giveaway,interview

This should feel familiar to you because it matches the options you get in the Buzzsumo App:

Changing this section of the string allows us to focus our research if we want to. So if we only wanted to see results where Buzzsumo has classified a piece of content as an infographic or a video, the string would look like this:

http://api.buzzsumo.com/search/articles.json?infographic,video&q=lonelyplanet.com&result_type=total&num_days=7&page=0&api_key=API_KEY_HERE

Generally, we tend to not change this bit of the string as we want as many results back from Buzzsumo as possible.

The next part of the API call is this bit:

q=lonelyplanet.com

This is where we define the domain or keyword that we want to run research for. Obviously this will change for each search you do based on what you need.

Next up in the call, we have:

result_type=total

This simply tells Buzzsumo how to order the results. By default, this will be set to total and the results will be ordered by the total number of social shares that a piece of content has. If you'd prefer the results to be ordered by something else, you can switch this out for Facebook, Twitter, Pinterest, etc.

Next in the call is:

num_days=7

This tells Buzzsumo how far back (in days) we want to go to get data. We typically set this to 360, which is the maximum amount of time you can go back.

page=0

This tells Buzzsumo what page of results to start at, with 0 being the first page.

api_key=API_KEY_HERE

This is where you set your unique API key which allows you to run these requests.
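Putting those parameters together by hand is error-prone, especially once the query contains spaces. The Python sketch below assembles the same kind of request URL with the standard library's `urlencode`. The function name `build_buzzsumo_url` is hypothetical, it caps `num_days` at the 360-day maximum mentioned above, and, like the PHP example later in this post, it leaves out the content-type list.

```python
from urllib.parse import urlencode

BASE_URL = "http://api.buzzsumo.com/search/articles.json"


def build_buzzsumo_url(query, api_key, result_type="total", num_days=360, page=0):
    """Build a Top Content request URL from the parameters described above.

    Hypothetical helper: num_days is capped at 360 (the maximum stated in the
    post) and the content-type list is omitted.
    """
    params = {
        "q": query,
        "result_type": result_type,
        "num_days": min(num_days, 360),
        "page": page,
        "api_key": api_key,
    }
    # urlencode escapes special characters; spaces in the query become "+"
    return BASE_URL + "?" + urlencode(params)


print(build_buzzsumo_url("lonelyplanet.com", "API_KEY_HERE", num_days=7))
print(build_buzzsumo_url("lonelyplanet.com OR wanderlust.co.uk", "API_KEY_HERE"))
```

Letting the library do the escaping means a multi-word keyword or an `OR` search can be passed in as plain text.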

Integrating with Google

Now that we know what the API request looks like and what we can play with, let's look at how to pull this into Google Sheets.

Fortunately (for non-coders like me) there is a cool function for Google Sheets called =ImportJSON. It's similar in many ways to =importxml, which you may have read about before.

Unfortunately, this function doesn't work out of the box: you have to go through a couple of simple steps to install a script first, but it's really easy and well explained in this blog post on Medium.

Once you've done that, you can start by performing a simple API call using the structure I've shown you above.

Simply paste the following into a cell in your Google Sheet:

=ImportJSON("http://api.buzzsumo.com/search/articles.json?article,infographic,video,guest_post,giveaway,interview&q=lonelyplanet.com&result_type=total&num_days=7&page=0&api_key=API_KEY_HERE")

After a few seconds, you'll see something like this appear:

Yay! Data! It's amazing when stuff just works.

Once you've pulled the data in for your chosen domains or keywords, you can do whatever you want with it. At a basic level, you can simply build an API call for each of the domains and pull the results into different tabs in your Google Sheet. So you'd end up with tabs that look something like this:

From here, you can use the built-in Google charts to visualise the numbers you find and compare competitors to each other.

What is more interesting is running a single API call and getting data back for several competitors or keywords at once. To do this, you simply use an advanced search in your API call. Continuing with the example above, we'd edit our API call so that it says this:

=ImportJSON("http://api.buzzsumo.com/search/articles.json?article,infographic,video,guest_post,giveaway,interview&q=lonelyplanet.com OR wanderlust.co.uk OR cntraveller.com&result_type=total&num_days=360&page=0&api_key=API_KEY_HERE")

The results now look like this:

What can we take from this?

Well, Lonely Planet is kicking ass because they own 19 of the 20 most shared articles. The only exception is Wanderlust, who appear in position 9.

We can also take all of the content titles and put them into a word cloud tool such as Wordle and get something like this:

You'll need to remove a few keywords such as the brands and common words that you'd expect, but the output can give you a good overview of the content themes being pushed out by your chosen domains or keywords.
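If you'd rather see the raw numbers behind a word cloud, counting words across titles is straightforward. The Python sketch below uses made-up titles and an exclusion list you would tune yourself (brand names plus common words); it is only an illustration of the filtering step, not how Wordle works internally.

```python
import re
from collections import Counter


def title_word_counts(titles, exclude):
    """Count words across content titles, skipping excluded words."""
    exclude = {w.lower() for w in exclude}
    counts = Counter()
    for title in titles:
        # Lower-case, then keep runs of letters, digits and apostrophes
        for word in re.findall(r"[a-z0-9']+", title.lower()):
            if word not in exclude:
                counts[word] += 1
    return counts


# Hypothetical titles, for illustration only
titles = [
    "The 10 best beaches in Italy",
    "Lonely Planet's guide to Italy on a budget",
    "Best budget hotels in Rome",
]
# Brand terms and common words to strip out before looking at themes
stop = {"the", "in", "to", "on", "a", "lonely", "planet's", "10"}
print(title_word_counts(titles, stop).most_common(3))
```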

But remember one of the problems I was trying to solve? I wanted to be able to drop in a list of keywords and competitors and have Buzzsumo fetch the data, rather than having to type it all in manually in the app.

I managed to get to the point where I could do this:

The eagle-eyed among you will notice there are a few hidden rows in this screenshot. I'll unhide them in the next screenshot so that I can show you what is going on and how you'd replicate what I've done above.

Remember I showed you how to build the API call above? I'm using Google Sheets below to build an API call and am simply using the =concatenate function to do so. In the following screenshot, I've used this function to concatenate my list of domains and keywords into the standard format for a Buzzsumo advanced search:

From here, I can use concatenate to build my API call. Cell A13 simply concatenates A8, A9 and A10. I know there are more technically efficient ways to do this using scripts, but as I said, I'm not a developer and I'm just trying to prove a concept here as quickly as I can.
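Outside of Google Sheets, the same concatenation step can be sketched in a few lines of Python. This assumes the `OR`-separated advanced search format shown in the ImportJSON example earlier; `build_advanced_query` is a hypothetical helper name, and blank cells and duplicate entries are simply dropped.

```python
def build_advanced_query(domains, keywords):
    """Join domains and keywords into one OR-separated advanced search string."""
    # Strip whitespace and skip blank cells, as pasted lists often contain them
    terms = [t.strip() for t in list(domains) + list(keywords) if t.strip()]
    # Drop duplicates while keeping the original order
    terms = list(dict.fromkeys(terms))
    return " OR ".join(terms)


query = build_advanced_query(
    ["lonelyplanet.com", "wanderlust.co.uk", "cntraveller.com"],
    ["luxury travel", ""],
)
print(query)
# lonelyplanet.com OR wanderlust.co.uk OR cntraveller.com OR luxury travel
```

The resulting string goes into the `q` parameter. Because it contains spaces, it will generally need URL-encoding before being sent.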

My output is a list of the top 20 results from Buzzsumo, ordered by total shares from all three domains and two keywords. I can take this data and run whatever analysis I want on it to find what content seems to perform best in terms of social shares and feed this into my content ideation process.
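One simple analysis is to total the shares per domain, which is how you would confirm something like Lonely Planet owning most of the top 20. This Python sketch assumes each result has been exported as a dict with `url` and `total_shares` fields; those field names are hypothetical, so map them to whatever your export actually contains.

```python
from collections import defaultdict
from urllib.parse import urlparse


def shares_by_domain(results):
    """Count articles and sum total shares per domain, largest total first."""
    totals = defaultdict(lambda: {"articles": 0, "shares": 0})
    for r in results:
        domain = urlparse(r["url"]).netloc.lower()
        if domain.startswith("www."):
            domain = domain[len("www."):]  # treat www.example.com as example.com
        totals[domain]["articles"] += 1
        totals[domain]["shares"] += r["total_shares"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1]["shares"], reverse=True))


# Hypothetical exported rows, for illustration only
rows = [
    {"url": "http://www.lonelyplanet.com/a", "total_shares": 1000},
    {"url": "https://www.lonelyplanet.com/b", "total_shares": 500},
    {"url": "http://www.wanderlust.co.uk/c", "total_shares": 300},
]
for domain, stats in shares_by_domain(rows).items():
    print(domain, stats["articles"], stats["shares"])
```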

Adding in live social shares

One of the goals of using the Buzzsumo API rather than the web interface was to pull in up-to-date social share numbers for each URL we find. Unfortunately, the social data Buzzsumo gives us isn't always up to date: it fetches social data for each URL at different intervals and generally updates older URLs less often than newer ones.

Luckily, pulling social share data into Google Sheets is really easy.

All you need is a free API key from Shared Count and the script from this blog post on Render Positive. Make a copy of the Google Sheet from Render Positive, then follow these steps.

1) Go to Tools > Script Editor

2) Click on this option:

3) Make a copy of the script:

4) Go back to your own sheet and click Tools > Script Editor

5) Click File > New > Script file

6) Paste in the script you just copied and save the script

7) Finally, you'll need to edit this cell reference to the cell where you plan on pasting your API key from Shared Count:

That's it!

Now, if you want to use the script, you just need to enter one of the following functions in the cell of your choice, along with the cell reference for the URL you want social shares for. Here are the five networks that Buzzsumo uses:

=SharedCountTwitter(A1)

=SharedCountFBTotal(A1)

=SharedCountLinkedIn(A1)

=SharedCountPinterest(A1)

=SharedCountGPlus(A1)

You'll end up with something like this:

Or, you can of course just make a copy of this spreadsheet and everything is already set up for you!

So after all of this, I ended up with a Google Sheet that did the job for me and solved most of my problems with the Buzzsumo web interface. After a couple of successful client projects using this process, we decided to build out a more powerful and scalable version of the tool, with the added bonus that it was less likely to break than my cobbled-together Google Sheet!

Building out your own tool using Buzzsumo

Importing data into Google sheets is one thing, but it only takes us so far and doesn't tend to scale particularly well. To overcome that we have the option of building our own piece of software that uses the principles I've mentioned above to move things to the next level.

In this example, our programming language of choice is PHP. As above, there are a few options that you pass into the API (including the keyword or domain, page number, number of days and your API key), so the overall principle is the same. Here's what we do:

Firstly, we build the Buzzsumo API call and save the URL into a variable:

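// $url_or_keyword, $z (the page number) and $numdays are assumed to be set earlier; BUZZSUMO_API_KEY is a constant holding your key
$buzzsumo_url = 'http://api.buzzsumo.com/search/articles.json?q='.urlencode($url_or_keyword).'&result_type=total&page='.$z.'&num_days='.$numdays.'&api_key='.BUZZSUMO_API_KEY;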

We then use that to grab the data and store it in another variable:

$json = file_get_contents($buzzsumo_url);

Finally, we take that data and translate it:

$response = json_decode($json);

Moving on from there, we take the response and pull out the data for each URL:

// Assumes the decoded response has a top-level "results" array
$results = $response->results;

foreach ($results as $result) {

// Get the URL & number of social shares, and then save them into a database

}

The important bit there is that we save each of the results into a database because that means we can take a massive data set for numerous domains and keywords and then look for trends.
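For readers working in Python rather than PHP, a rough equivalent of the decode-and-store step might look like this. It uses an in-memory SQLite database and a hand-written sample response; the `results`, `url` and `total_shares` field names are assumptions about the response shape, not confirmed details of the Buzzsumo API.

```python
import json
import sqlite3


def save_results(conn, query, raw_json):
    """Decode a Top Content response and store one row per URL.

    Assumed shape: a top-level "results" list whose items carry "url" and
    "total_shares" (hypothetical field names; check the API documentation).
    """
    response = json.loads(raw_json)
    rows = [(query, r["url"], r.get("total_shares", 0)) for r in response.get("results", [])]
    conn.executemany(
        "INSERT INTO articles (query, url, total_shares) VALUES (?, ?, ?)", rows
    )
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE articles (query TEXT, url TEXT, total_shares INTEGER)")

# A hand-written stand-in for an API response
sample = json.dumps({"results": [
    {"url": "http://example.com/a", "total_shares": 120},
    {"url": "http://example.com/b", "total_shares": 80},
]})
save_results(conn, "example.com", sample)

# Once many queries are stored, trends are one SQL query away
for row in conn.execute("SELECT query, SUM(total_shares) FROM articles GROUP BY query"):
    print(row)
```

Storing every run in one table is what makes cross-domain and cross-keyword comparisons possible later.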

To show what can be done, we took an example set of 7 sites and ran them through our profiler. The process, and results, can be seen in the video below. This is just a quick example of what can be done: with some time and imagination, you can do a lot with the Buzzsumo API and mash it up with other APIs to get a really cool data set.

As you can see, within a couple of minutes we can look at the top content for each of our chosen sites, as well as see which networks are most effective for them. We've also built in an export function that allows us to easily drop the data into Excel and do a manual analysis of the results. You may have also spotted that we've added in Moz and Majestic data, which allows us to do analysis along the lines of what Buzzsumo recently did in this post.

That's about it! In summary, the standard version of Buzzsumo can help you get great insights to feed into your content ideas and strategy. If you have the time and you're working across lots of clients, it's definitely worth taking a look at the paid version and making use of the API if you (like us) want to mash up the data with other APIs.

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don't have time to hunt down but want to read!

Tuesday, 2 June 2015

Beyond Links: Why Google Will Rank Facts in the Future

How To Hire A Great Link Builder

Pinterest to Add Buy Button for Revenue Generation

Repurposing Unsuccessful HAROs

I had one of those “I can’t believe I didn’t think of this earlier…” moments a few days ago, concerning HARO requests.


Sometimes getting a comment out of a client (rather than ghost-writing one for them, which I’m personally not a fan of doing) for a HARO request – or another type of PR request – can be like getting blood out of a stone. And when they’ve gone to the effort of doing it – and it’s a few hundred words rather than a sentence or two – but it doesn’t get published, it can be a real shame.

But it doesn’t have to go to waste… Why not reuse it?

In this post, I’ll talk you through my process and approach to repurposing HARO requests. But first of all…

What is HARO?

If you’re not already familiar with it, HARO (Help A Reporter Out) is like a matchmaking service between marketers/PRs and journalists/bloggers. HARO sends out three emails every day containing requests from journalists who are after information, tips or an interview on certain topics, and marketers – on behalf of their clients, or themselves – can pitch to them with answers to their queries. Here are a few random examples from a recent email:

HARO has been widely used by some big players in recent months, including the BBC, Mashable, the Huffington Post, the Independent, the New York Times, Forbes and Fast Company, to name a few. You’ll find that it tends to be more US-focused, but even so, I’ve gotten some good results out of it for my UK-based clients. You may often see niche-specific requests as well: for example, I’ve gotten my parents’ IT recruitment agency featured on Monster (the big job site) a few times, which is an ideal placement, both in terms of authority and relevancy. There are other similar services out there, such as SourceBottle, which is less US-focused (many of its requests also apply to the UK, Ireland, Canada, Australia and New Zealand).

In a nutshell, I love HARO – so much so that I wrote a ‘how to’ guide here.

The long, hard slog…

Getting clients to buy into HARO can be tough. If they go to the effort of providing a few comments to requests and not many of them get successfully published, it may put them off the process entirely. Sometimes it’s the luck of the draw as well: one of my clients manages to get every single one of their HARO requests published, while for another client it took numerous attempts before their first one went live.

When I ask clients to respond to HARO requests, I ask them to provide “a few sentences/a paragraph or two”, but sometimes they get carried away: one once provided 300-400 words, while another provided more like 700-800…!

The lightbulb moment hit the other day with one of my clients, who took the effort to write 300-400 words, which, it’s fair to say, is a blog-post-sized contribution. Their contribution was really good, but I noticed that the post went live with someone else’s comments instead. It was at that moment that I realised that, rather than let it go to waste, they could flesh it out and use it as a post on their own blog.

Of course, the tricky thing with HARO is that your client’s response may go live without the requester taking the time to let you know. This could mean that you repurpose and reuse the comment while being none the wiser that it’s already out there, risking duplicate content. Therefore, I advise following a couple of steps to make sure that you don’t accidentally end up duplicating it…

Step #1 – Wait…

The best thing to do first of all? Be patient. I don’t know about you, but I’m an extremely impatient person, so this is often the most frustrating part…!

I recommend giving it 1-2 weeks – maybe even a little longer – after the deadline before doing anything with it at all. That should be ample time for the requester to have published the post from the response(s) that they’ve received.

You can also set up alerts to let you know in the meantime (more on that in the next step).

I actually recently contributed to a HARO request on the subject of how bloggers can get more inbound links to their blog to boost its SEO. I’m still waiting before I make my move, and I haven’t heard anything back just yet, but if it’s unsuccessful then I plan to repurpose it – either as a guest blog post for a blogging advice blog or on my own blog – as it came to 354 words in the end…

Step #2 – Checking to see if it’s been published or not

Once you’ve waited a few weeks and you’ve not heard back, rather than assuming that it’s been unsuccessful, it’s probably best to just double-check that your client’s contribution has not been used.

If the HARO requester revealed what website it’s for, you can check their blog/article section and try to find the post. This will be much trickier, however, if the requester decided to list themselves as ‘Anonymous,’ in which case…

You can also set up alerts on your client’s brand name and URL, which might notify you if they’ve been mentioned in a blog post. I have alerts set up for all of my clients, and while it’s not foolproof, I’ve been notified of a few successful HAROs this way. In my experience, Google Alerts has been terrible for a while now, so these days I advise using other alert providers – I tend to use a combination of Moz’s Fresh Web Explorer and Talkwalker.

If you know of any others out there that are good to use then please leave a comment below.

You could even go as far as setting up a separate, custom alert on the HARO request’s title, which might end up being the article’s title as well. Or even take a few phrases from your client’s contribution and set up an alert on that portion of text. It might be a bit overkill doing this with every single HARO, but if there’s one that you’re really keen to repurpose – if it’s a killer response – then it might be worth going to the effort.

Step #3 – Fleshing it out and publishing it yourself

When you’re as sure as you’ll ever be that it hasn’t gone live, now is the time to act. If it’s a little on the short side, it makes sense to flesh it out a bit. At the very least you’ll probably need to write an intro and a conclusion, so you’ll end up expanding its word count in the process anyway.

And voilà: you have a blog post that you didn’t have before, the majority of which was ready and raring to go (rather than writing it entirely from scratch). And your client will be happy that they didn’t waste their time – especially if they’re proud of what they said.

You can even be creative: why settle for a blog post when you can turn it into something else? Something like a video or (dare I say it) an infographic, or another type of content entirely? As the old recycling slogan goes: “the possibilities are endless”…

So don’t let a rejection get you down – or let it go to waste. Repurpose it and use it for yourself.

[Recycle Your Ideas image credit: emilegraphics]

Post from Steve Morgan

Monday, 1 June 2015

Uber Partners With Foursquare to Combine Local Search and Transportation

Mobile Surpasses Desktop in Search Queries

Uber's Partnership with Foursquare Gives a Further Advantage in Local Search

Why are you here?

It sounds like a philosophical question. And in some cases it probably is. In this case it’s an honest question though: we really want to know, why are you here?

And by here we of course mean State of Digital. What makes you come to this website, or to a platform like State of Digital in the first place? Are you looking for new learnings? News maybe? Or something else?

With the start of the new month (can you believe it’s June already!?) we figured that this month we would ask you that question in our poll. The poll is available on every page in the sidebar!

Did you see we’ve been polling State of Digital visitors over the past few months? You can still see (and answer) the polls here. They give you a nice insight into who is actually visiting our website.

What will we do with it? Quite simple: we want to improve our content and make it a better fit for you! With your answers we can do just that! So tell us, why are you here?

Post from Bas van den Beld

Facebook's PGP Email Encryption Will Anonymize Email

Google Now on Tap Makes App Search Optimization More Critical Than Ever

Linkophobia: Fear of Link-Building

Search marketing insights: Bing Ads makes the holidays work for you

I may be a digital marketer but I must confess: I am French before all. As such, my summer holidays are close to sacrosanct, on par with the Tour de France, tartiflette and a good shrug. So as the warmer months close in, I like to stay on top of tourism trends, especially when they entail international travels…

As a matter of fact, due to the strength of the US dollar in recent months, travel operators and those in the hospitality industry are expecting an influx of American tourists gracing foreign climes this summer. According to Choice Hotels, the year started with a positive outlook for U.S. travellers because of low unemployment and low petrol prices, with the promise of an 8% increase in leisure travel and a 5% increase in spend per trip. To help businesses capture this opportunity, my Bing Ads colleagues in the U.S. have compiled a comprehensive guide to the what, why and how in travel.

The 2015 Travel Tips deck hosted on SlideShare includes some stellar stats and trends they’ve uncovered from third parties and goes on to deliver some actionable PPC insight gleaned from hours of data mining across the Yahoo Bing Network by our Bing Ads research team. We are also hosting a webinar which will dig into the specifics of European travel search habits and opportunities.

Here are some of my takeaways from the data we gathered on both sides of the pond:

Plan Campaigns Around Twin Peaks of Summer and Winter

Let’s start with the obvious: travel-related searches peak in time for summer and winter travel, June to August for summer and December to January for winter. Advertisers need to remember, though, that searching starts before those peaks, in the research and consideration phase of the purchase funnel.

This seasonal bias is particularly true for North America, where the number of holidays is limited and travel tends to be concentrated. In Europe, however, we are seeing some smaller hills rising between the peaks. These micro-trends are dictated by school calendars or by the desire of experience-seeking Millennials to optimise their time off between two bank holidays. For instance, we can see March and April emerging as a new booking season, especially for short stays in sunny destinations.

OTA/Aggregators: Take advantage of high CTRs

The click-through rate for all OTA/Aggregator-related searches is massive! Bing Ads data shows it hovering between 11% and 16%. For smartphone searches, the CTR is even higher – between 14% and 19%. These high CTRs show consumers are happy to click on a travel ad, especially if its ad copy is enticing, seasonal or has some kind of price-driver behind it.

With high CTR and low CPCs, OTA/Aggregator advertisers should consider bidding on their own brand terms in order to prevent losing mindshare and conversions to competitors bidding on theirs.

Some other research from Bing Ads on brand bidding has shown that bidding on one’s own brand terms results in higher click yield, along with a decrease in the click yield for competitor ads. In other words, brands get more clicks and keep competitors at bay. The research also found that bidding on the most prominent ad spot (“main line” or ML1) is particularly important for smaller advertisers.

Target Last-Minute Hotel Bookers

Astoundingly, over 50% of searches on hotel mobile sites happen within a week of travellers actually arriving at the destination. Taking this into account, marketers looking to reach these last-minute bookers should update campaigns and ad copy to reflect time-sensitive pricing or a speedy booking process to capture their attention above the competition.

Remember to Plan Multi-Device Campaigns

Six in 10 leisure travellers use search engines to plan their trips (eMarketer, Business Travel 2.0, Nov. 2014), and they plan, research and book travel across multiple devices. In the UK, for instance, 39% of travel-related queries are made on a tablet or smartphone. It’s critical that advertisers meet travel searchers wherever they are – whether that’s a PC at work, tablet at home, or smartphone on the road. Campaigns and ad copy can be tailored for PC/tablets and smartphones to engage consumers as they plan their vacation and research destinations and local attractions.

Get Ready for the Mobile Majority

The Yahoo Bing Network saw a 43% year-over-year increase in the number of smartphone searches related to travel, and we expect that number to keep growing. With 58% of travel spending occurring during a trip, it’s important for local advertisers to have mobile ads ready to capture nearby travellers.

Adding Location and Call Extensions to your PPC ads will drive relevant traffic to your mobile (or mobile-friendly) website, storefront, or reservation line, allowing consumers to easily contact you to learn more or make a reservation.

Advertisers with mobile apps can also add App Extensions to encourage mobile travellers to install their apps. By optimising their mobile campaigns, advertisers will be able to target relevant audiences and better connect with leisure travellers.

Inspire travellers to stay longer

Americans often search for longer vacations than they book. And yet, the average American carried over 3.3 paid vacation days from 2014 to 2015. That’s $56.6 billion worth of paid vacation days!

Advertisers can use ad copy and length-based special offers to inspire travellers to stay longer and use an extra paid vacation day or two.

The peak for travel searches might be upon us but this research shows there are plenty of opportunities to capture the attention of travellers, not just before and as they purchase their ticket, but during their travels while they’re on the ground as well.

I’ll be bringing you more international search insights in a regular column here on State of Digital every month, so please don’t hesitate to ask me a question in the comments below or ping me on Twitter @CEDRICtus

Post from Cedric Chambaz

Misuses of 4 Google Analytics Metrics Debunked

Posted by Tom.Capper

In this post I'll pull apart four of the most commonly used metrics in Google Analytics, explaining how they are collected and why they are so easily misinterpreted.

Average Time on Page

Average time on page should be a really useful metric, particularly if you're interested in engagement with content that's all on a single page. Unfortunately, this is actually its worst use case. To understand why, you need to understand how time on page is calculated in Google Analytics:

Time on Page: Total across all pageviews of time from pageview to last engagement hit on that page (where an engagement hit is any of: next pageview, interactive event, e-commerce transaction, e-commerce item hit, or social plugin). (Source)

If there is no subsequent engagement hit, or if there is a gap between the last engagement hit and the visitor leaving the site, Google Analytics assumes that no further time was spent on the page. Below are three scenarios in which the visitor intuitively spends 20 seconds on the page, alongside the time on page Google Analytics records for each:

| Scenario | Intuitive time on page | GA time on page |
|---|---|---|
| 0s: Pageview; 20s: Next pageview | 20s | 20s |
| 0s: Pageview; 10s: Engagement hit (e.g. an event); 20s: Exit | 20s | 10s |
| 0s: Pageview; 20s: Exit | 20s | 0s |

Google doesn't want exits to influence average time on page, because in scenarios like the third example above, an exiting pageview records a time on page of 0 seconds (source). To avoid this, they use the following formula (remember that Time on Page is a total):

Average Time on Page: (Time on Page) / (Pageviews - Exits)

However, as the second example above shows, exits don't always have a time on page of 0 seconds. The second example contributes its 10 seconds to the numerator of the average time on page fraction, but its pageview is excluded from the denominator:

Average Time on Page across all three examples: (20s + 10s + 0s) / (3 - 2) = 30s
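To make the arithmetic concrete, here is a minimal Python sketch that models the definition and formula above. The `PageView` class, the hit labels (loosely modelled on analytics.js hit types) and the function names are hypothetical illustrations, not Google Analytics code; the sketch simply reproduces the three table rows and the 30s average.

```python
from dataclasses import dataclass, field

# Hypothetical hit labels that count as engagement hits under the definition
# quoted above. An exit itself sends no hit, so it never appears here.
ENGAGEMENT_HITS = {"pageview", "event", "transaction", "item", "social"}


@dataclass
class PageView:
    is_exit: bool  # True if this was the last pageview in the session
    # (seconds after this pageview, hit label) for later hits on this page,
    # including the next pageview if the visitor continued.
    later_hits: list[tuple[int, str]] = field(default_factory=list)


def ga_time_on_page(view: PageView) -> int:
    """Seconds from the pageview to the last engagement hit (0 if there is none)."""
    return max((t for t, label in view.later_hits if label in ENGAGEMENT_HITS), default=0)


def ga_average_time_on_page(views: list[PageView]) -> float:
    """Total Time on Page / (Pageviews - Exits)."""
    exits = sum(view.is_exit for view in views)
    if exits == len(views):
        # This sketch does not model what GA reports in this edge case.
        raise ValueError("every pageview was an exit; denominator would be 0")
    return sum(ga_time_on_page(view) for view in views) / (len(views) - exits)


# The three scenarios from the table: each visitor really spent 20s on the page.
scenarios = [
    PageView(is_exit=False, later_hits=[(20, "pageview")]),  # clicks through at 20s
    PageView(is_exit=True, later_hits=[(10, "event")]),      # engages at 10s, leaves at 20s
    PageView(is_exit=True),                                  # leaves at 20s, no hits
]

print([ga_time_on_page(v) for v in scenarios])  # [20, 10, 0]
print(ga_average_time_on_page(scenarios))       # 30.0
```

The 10 seconds from the second scenario lands in the numerator while its pageview is subtracted from the denominator, which is exactly why the result exceeds every individual visit.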

There are two issues here:

1. Overestimation

Excluding exits from the denominator of the average time on page equation doesn't have the desired effect when their time on page wasn't 0 seconds: note that 30s is longer than any of the individual visits. This is why average time on page can often be longer than average visit duration. Nonetheless, 30 seconds doesn't seem too far off in the above scenario (the intuitive average is 20s), but in the real world many pages have much higher exit rates than the 67% in this example, and/or much less engagement with events on the page.

2. Ignored visits

For visitors who exit without any engagement hit, it makes no difference whether they stayed for 2 seconds, 10 minutes or anything in between: they don't influence average time on page in the slightest. On many sites, a 10-minute view of a single page without interaction (e.g. a blog post) would be considered a success, but it wouldn't register in this metric.

Solution: Unfortunately, there isn't an easy fix for this. If you want to use average time on page, you just need to keep in mind how it's calculated. You could also consider setting up more engagement events on the page (like a scroll event without the "nonInteraction" parameter). This solves issue #2 above, but potentially worsens issue #1.
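The trade-off in that solution can be illustrated with a short Python sketch. The page, the visitors' real reading times and the moments at which a scroll event fires are all hypothetical numbers chosen for illustration; the formula is the one given above.

```python
def ga_average_time_on_page(views: list[tuple[int, bool]]) -> float:
    """Each view is (seconds to last engagement hit, is_exit).

    Total Time on Page / (Pageviews - Exits), as in the formula above.
    """
    total = sum(seconds for seconds, _ in views)
    exits = sum(1 for _, is_exit in views if is_exit)
    if exits == len(views):
        raise ValueError("every pageview was an exit; denominator would be 0")
    return total / (len(views) - exits)


def intuitive_average(actual_seconds: list[int]) -> float:
    """What we'd like to measure: real time spent, averaged over all views."""
    return sum(actual_seconds) / len(actual_seconds)


# Hypothetical page: one visitor clicks through after 30s; four exit after
# really spending 5s, 40s, 60s and 600s on the page.
actual = [30, 5, 40, 60, 600]

# No engagement hits before exiting: every exit contributes 0s.
without_scroll = [(30, False), (0, True), (0, True), (0, True), (0, True)]

# Assumed scroll events (sent without nonInteraction) at 20s, 30s and 300s
# for the three longer readers; the 5s visitor never scrolls.
with_scroll = [(30, False), (0, True), (20, True), (30, True), (300, True)]

print(intuitive_average(actual))                # 147.0
print(ga_average_time_on_page(without_scroll))  # 30.0
print(ga_average_time_on_page(with_scroll))     # 380.0
```

With the scroll event, the 10-minute reader finally counts (issue #2), but because exits still drop out of the denominator the average overshoots the intuitive 147s by a wide margin (issue #1).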

Site Speed

If you've used the Site Speed reports in Google Analytics in the past, you've probably noticed that the numbers can sometimes be pretty difficult to believe. This is because the way Site Speed is tracked is extremely vulnerable to outliers: it starts with a 1% sample of your users and then takes a simple average for each metric. This means that a few extreme values (for example, the occasional user with a malware-infested computer or a questionable Wi-Fi connection) can create a very large swing in your data.

Using an average is not bad in itself, but with data this prone to outliers and a sample this small, it can produce questionable results.
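A quick Python sketch shows how much a single outlier can move a simple average in a small sample. The load times are hypothetical, and the median is printed only for comparison; it is not something the Site Speed reports offer.

```python
from statistics import mean, median

# Hypothetical page load times (seconds) from a small sample: nine typical
# users plus one on a very slow connection.
load_times = [1.2, 1.4, 1.1, 1.3, 1.5, 1.2, 1.4, 1.3, 1.2, 45.0]

print(round(mean(load_times), 2))       # 5.66  (simple average, as the text describes)
print(round(mean(load_times[:-1]), 2))  # 1.29  (same sample without the outlier)
print(median(load_times))               # 1.3   (barely moved by the outlier)
```

One slow user more than quadruples the reported average, which is the kind of swing described above.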

Fortunately, you can increase the sampling rate right up to 100% (subject to a cap of 10,000 hits per day). Depending on the size of your site, this may still only be useful for top-level data. For example, if your site gets 1,000,000 hits per day and you're interested in the performance of a new page that's receiving 100 hits per day, Google Analytics will throttle your sampling back to the cap of 10,000 hits per day, an effective rate of 1%. As such, you'll only be looking at a sample of about 1 hit per day for that page.
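The arithmetic in that example can be written out as a small Python sketch. The function names are hypothetical, the 10,000-hit cap is taken from the text, and the per-page figure is an expected value that assumes sampled hits are spread uniformly across pages.

```python
DAILY_CAP = 10_000  # Site Speed hits processed per day, as stated above


def effective_sample_rate(configured_rate: float, site_hits_per_day: int) -> float:
    """Share of all hits that end up sampled once the daily cap applies."""
    requested = configured_rate * site_hits_per_day
    return min(requested, DAILY_CAP) / site_hits_per_day


def expected_sampled_hits(page_hits_per_day: int, configured_rate: float,
                          site_hits_per_day: int) -> float:
    """Expected daily samples for one page, assuming uniform sampling across pages."""
    return page_hits_per_day * effective_sample_rate(configured_rate, site_hits_per_day)


print(effective_sample_rate(1.0, 1_000_000))       # 0.01: 100% requested, 1% delivered
print(expected_sampled_hits(100, 1.0, 1_000_000))  # 1.0 sampled hit per day for the new page
print(effective_sample_rate(1.0, 8_000))           # 1.0: a small site stays under the cap
```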

Solution: Turn up the sampling rate. If you receive more than 10,000 hits per day, keep the sampling rate in mind when digging into less visited pages. You could also consider external tools and testing, such as Pingdom or WebPagetest.

Conversion Rate (by channel)

Obviously, conversion rate is not in itself a bad metric, but it can be rather misleading in certain reports if you don't realise that, by default, conversions are attributed using a last non-direct click attribution model.

From Google Analytics Help:

"...if a person clicks over your site from google.com, then returns as "direct" traffic to convert, Google Analytics will report 1 conversion for "google.com / organic" in All Traffic."

This means that when you're looking at conversion numbers in your acquisition reports, it's quite possible that every single number differs from what you'd expect under last-click attribution: every channel other than direct has a total that includes some conversions that occurred during direct sessions, and direct itself is missing those same conversions.
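The difference between the two models can be sketched in a few lines of Python. The conversion paths are hypothetical, and this simplified model ignores details of Google Analytics' real attribution (such as campaign timeouts); it only shows how crediting the last non-direct channel reshuffles the totals.

```python
from collections import Counter

DIRECT = "(direct) / (none)"  # source / medium label GA uses for direct traffic


def last_click(path: list[str]) -> str:
    """Credit the channel of the session in which the conversion happened."""
    return path[-1]


def last_non_direct_click(path: list[str]) -> str:
    """Credit the most recent non-direct channel; only all-direct paths stay direct."""
    for channel in reversed(path):
        if channel != DIRECT:
            return channel
    return DIRECT


# Hypothetical conversion paths: one source / medium per session, oldest first,
# ending with the converting session.
paths = [
    ["google / organic", DIRECT],  # the scenario from the help text above
    [DIRECT],
    ["newsletter / email", DIRECT, DIRECT],
    ["google / organic"],
]

print(Counter(last_click(p) for p in paths))
print(Counter(last_non_direct_click(p) for p in paths))
```

Under last click, direct would get three of the four conversions; under last non-direct click it keeps only the one from the all-direct path, while organic and email each gain credit for conversions that happened in direct sessions.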

Solution: This is just something to be aware of. If you do want to know your last-click numbers, there are always the Multi-Channel Funnels and Attribution reports to help you out.

Exit Rate

Unlike some of the other metrics I've discussed here, exit rate has a very intuitive calculation: "for all pageviews to the page, Exit Rate is the percentage that were the last in the session." The problem with exit rate is that it's so often used as a negative metric: "Which pages had the highest exit rate? They're the problem with our site!" Sometimes this is true, for example if those pages sit in the middle of a checkout funnel.
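The quoted definition translates directly into a short Python sketch. The session data and page paths are hypothetical; each session is just the ordered list of pages viewed.

```python
from collections import Counter


def exit_rates(sessions: list[list[str]]) -> dict[str, float]:
    """Exit Rate per page: share of that page's pageviews that were the
    last pageview in their session."""
    pageviews: Counter = Counter()
    exits: Counter = Counter()
    for pages in sessions:
        pageviews.update(pages)
        if pages:
            exits[pages[-1]] += 1
    return {page: exits[page] / pageviews[page] for page in pageviews}


# Hypothetical sessions, each an ordered list of pageviews.
sessions = [
    ["/home", "/blog/post"],
    ["/blog/post"],
    ["/home", "/product", "/checkout/payment"],
    ["/home", "/product", "/checkout/payment", "/checkout/thanks"],
    ["/product"],
]

for page, rate in exit_rates(sessions).items():
    print(f"{page}: {rate:.0%}")
```

Here the blog post exits 100% of the time, which may be perfectly healthy, while the payment step exits 50% of the time, which is the kind of mid-funnel exit worth investigating.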

Often, however, a user will exit a site when they've found what they want. This doesn't just mean that a high exit rate is OK on informational pages like blog posts or About pages; it could also be true of product pages and other pages with a strongly conversion-focused intent. Even on e-commerce sites, not every visitor intends to convert. They might be researching ahead of a later online purchase, or even planning to visit your physical store. This is particularly true if your site ranks well for long-tail queries or is referenced elsewhere. In these cases, an exit could be a sign that they found the information they wanted and will be ready to buy once they have the money, the need or the right device at hand, or the next time they pass by your shop.

Solution: When judging a page by its exit rate, think about the various possible user intents. It could be useful to take a segment of visitors who exited on a certain page (in the Advanced tab of the new segment menu), and investigate their journey in User Flow reports, or their landing page and acquisition data.
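If you export session data for offline analysis, the same segment-then-inspect idea can be sketched in Python. The `Session` structure, page paths and sources below are hypothetical; inside Google Analytics itself you would build the segment in the interface as described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    source_medium: str
    pages: list[str]  # pageviews in order; pages[0] is the landing page


def exited_on(sessions: list[Session], page: str) -> list[Session]:
    """Segment: sessions whose last pageview was `page`."""
    return [s for s in sessions if s.pages and s.pages[-1] == page]


def summarise(segment: list[Session]) -> tuple[Counter, Counter]:
    """Landing pages and acquisition sources within the segment."""
    landing_pages = Counter(s.pages[0] for s in segment)
    sources = Counter(s.source_medium for s in segment)
    return landing_pages, sources


# Hypothetical exported sessions.
sessions = [
    Session("google / organic", ["/product/widget"]),
    Session("google / organic", ["/blog/widget-guide", "/product/widget"]),
    Session("(direct) / (none)", ["/home", "/product/widget", "/basket"]),
    Session("newsletter / email", ["/home", "/product/widget"]),
]

landing, sources = summarise(exited_on(sessions, "/product/widget"))
print(landing)
print(sources)
```

A segment dominated by organic visitors landing directly on the product page might suggest research-stage intent rather than a broken page.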

Discussion

If you know of any other similarly misunderstood metrics, have any questions, or have something to add to my analysis, tweet me at @THCapper or leave a comment below.
